Watch with your smartest loved one...

Then contact me to discuss your next options and "uncover your new class of responsibilities."

David

617-331-7852

david@davidcutler.net

www.DavidCutler.net

Share This:

https://bit.ly/Virtual_Reality_Check

Outline of section links:

0:49 Introduction: Steve Wozniak introduces Tristan Harris and Aza Raskin

1:30 Talk begins: The rubber band effect

3:16 Preface: What does responsible rollout look like?

4:03 Oppenheimer and the Manhattan Project analogy

4:49 Survey results on the probability of human extinction

3 Rules of Technology

5:36 1. A new technology uncovers a new class of responsibilities

6:42 2. If a tech confers power, it starts a race

6:47 3. If you don't coordinate, the race ends in tragedy

First contact with AI: 'Curation AI' and the Engagement Monster

7:02 First contact moment with curation AI: Unintended consequences

8:22 Second contact with creation AI

8:50 The Engagement Monster: Social media and the race to the bottom

Second contact with AI: 'Creation AI'

11:23 Entanglement of AI with society

12:48 Not here to talk about the AGI apocalypse

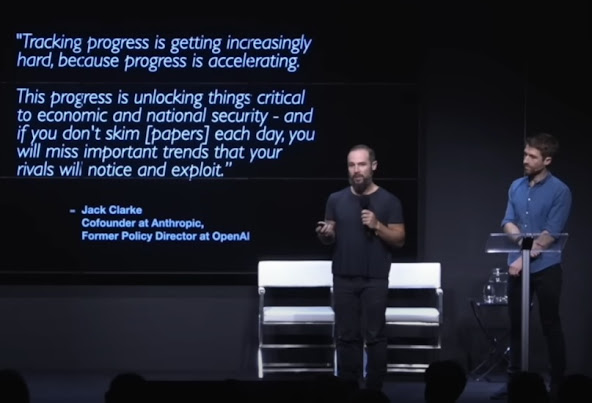

14:13 Understanding the exponential improvement of AI and machine learning

15:13 Impact of language models on AI

Gollem-class AIs

17:09 GLLMM: Generative Large Language Multi-Modal Model (Gollem AIs)

18:12 Multiple examples: Models demonstrating complex understanding of the world

22:54 Security vulnerability exploits using current AI models, and identity verification concerns

27:34 Total decoding and synthesizing of reality: 2024 will be the last human election

Emergent capabilities of GLLMMs

29:55 Sudden breakthroughs in multiple fields and theory of mind

33:03 Potential shortcomings of current alignment methods against a sufficiently advanced AI

34:50 Gollem-class AIs can make themselves stronger: AI can feed itself

37:53 Nukes don't make stronger nukes: AI makes stronger AI

38:40 Exponentials are difficult to understand

39:58 AI is beating tests as fast as they are made

Race to deploy AI

42:01 Potential harms of second-contact AI

43:50 AlphaPersuade

44:51 Race to intimacy

46:03 At least we're slowly deploying Gollems to the public to test them safely?

47:07 But we would never actively put this in front of our children?

49:30 But at least there are lots of safety researchers?

50:23 At least the smartest AI safety people think there's a way to do it safely?

51:21 Pause, take a breath

How do we choose the future we want?

51:43 Challenge of talking about AI

52:45 We can still choose the future we want

53:51 Success moments against existential challenges

56:18 Don't onboard humanity onto the plane without democratic dialogue

58:40 We can selectively slow down the public deployment of GLLMM AIs

59:10 Presume public deployments are unsafe

59:48 But won't we just lose to China?

How do we close the gap?

1:02:28 What else can we do to close the gap between what is happening and what needs to happen?

1:03:30 Even bigger AI developments are coming. And faster.

1:03:54 Let's not make the same mistake we made with social media

1:03:54 Recap and call to action